-

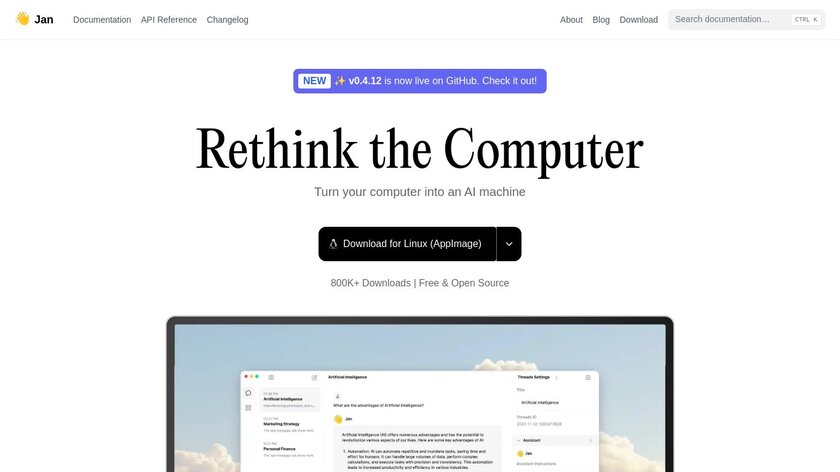

Run LLMs like Mistral or Llama 2 locally and offline on your computer, or connect to remote AI APIs like OpenAI’s GPT-4 or Groq.

Pricing:

- Open Source

Use Local AI as a Daily Driver

I have been using a local LLM as a daily driver. I built https://recurse.chat for it.

Discuss: Ask HN: Which LLMs can run locally on most consumer computers
