-

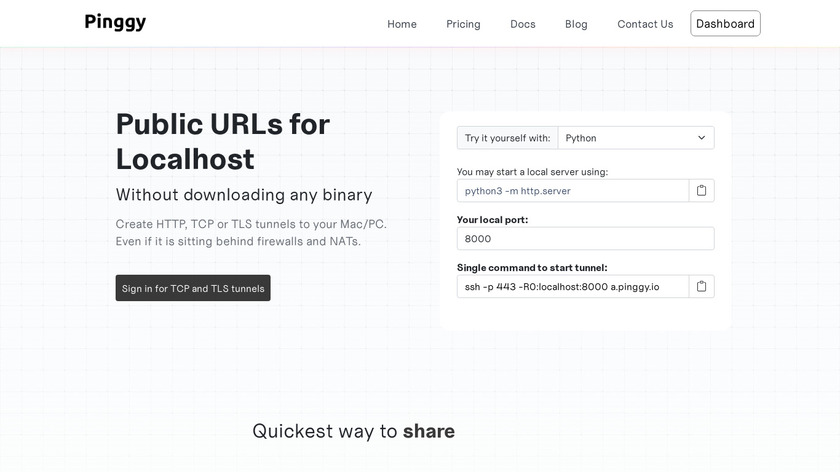

Public URLs for localhost without downloading any binary

Pricing:

- Freemium

- Free Trial

- $2.5 / month (Pro: 1 tunnel; HTTP, TCP, and TLS tunnels; custom domain)

As generative AI adoption grows, developers increasingly seek ways to self-host large language models (LLMs) for enhanced control over data privacy and model customization. OpenLLM is an excellent framework for deploying models like Llama 3 and Mistral locally, but exposing them over the internet can be challenging. Enter Pinggy, a tunneling solution that allows secure remote access to self-hosted LLM APIs without complex infrastructure.

#Localhost Tools #Testing #Webhooks 134 social mentions

-

Frontier AI in your hands

Pricing:

- Open Source

#AI #AI Tools #AI API 27 social mentions

Discuss: How to Easily Share OpenLLM API Online
