- Hugging Face

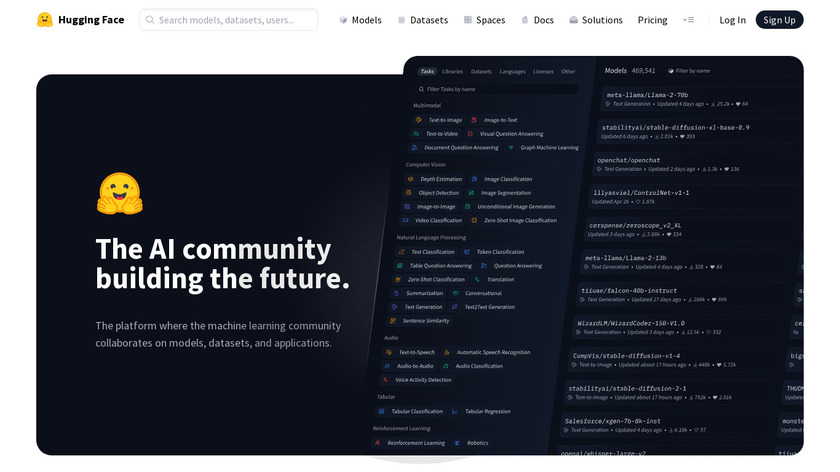

The AI community building the future. The platform where the machine learning community collaborates on models, datasets, and applications.

To use the Gemma 2 model, you first need a Hugging Face account. Create one if you don't already have one, then create an access token with read permissions from your settings page. Note down the token value; we'll need it shortly.
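On GKE, the token typically ends up in a Kubernetes Secret that the model server's Deployment reads at startup. The sketch below builds such a Secret manifest in Python. The secret name `hf-secret` and the key `hf_api_token` are hypothetical placeholders, not names the post prescribes.

```python
import base64
import json


def hf_token_secret(token: str, name: str = "hf-secret", key: str = "hf_api_token") -> dict:
    """Build a Kubernetes Secret manifest that holds a Hugging Face token.

    `name` and `key` are hypothetical defaults; match them to whatever your
    Deployment's env/valueFrom configuration expects.
    """
    # Values under "data" in a Secret must be base64-encoded.
    encoded = base64.b64encode(token.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: encoded},
    }


def to_json(manifest: dict) -> str:
    """Serialize the manifest; kubectl accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

To apply it, read the token from the environment (for example `to_json(hf_token_secret(os.environ["HF_TOKEN"]))`) and pipe the output to `kubectl apply -f -`, so the token is never hard-coded. The one-liner `kubectl create secret generic hf-secret --from-literal=hf_api_token=$HF_TOKEN` achieves the same result directly; generating the manifest yourself is mainly useful when it is templated alongside other resources.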

#AI #Social & Communications #Chatbots 306 social mentions

- Google Cloud Monitoring

Gain visibility into the performance, uptime, and overall health of cloud-powered apps on Google Cloud and other cloud or on-premises environments.

Monitoring: Use Cloud Monitoring and Cloud Logging to track the performance of both Gemma and your LangChain application. Look for error rates, latency, and resource utilization.
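Cloud Monitoring computes these signals for you, but it helps to be precise about what they mean. The following sketch computes the error rate and nearest-rank p50/p95 latency for a batch of requests. It assumes you have per-request records (the `RequestRecord` type is hypothetical) and treats any HTTP 5xx response as an error. Resource utilization is not covered here.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float  # end-to-end latency of one request
    status: int        # HTTP status code returned to the client


def percentile(sorted_values: list, p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of data at or below it."""
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]


def summarize(records: list) -> dict:
    """Error rate (5xx share) and p50/p95 latency for a batch of RequestRecords."""
    if not records:
        raise ValueError("no records to summarize")
    # Count server-side failures only; 4xx responses are treated as client errors.
    errors = sum(1 for r in records if 500 <= r.status < 600)
    latencies = sorted(r.latency_ms for r in records)
    return {
        "error_rate": errors / len(records),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }
```

For example, four requests with latencies of 100, 200, 300, and 400 ms, one of which returned a 500, give an error rate of 0.25, a p50 of 200 ms, and a p95 of 400 ms. Tail percentiles such as p95 tend to expose queuing on an overloaded model server earlier than the mean does.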

#Dev Ops #Monitoring #Cloud Monitoring 7 social mentions

- Gemma

Introducing Gemma, a family of open-source, lightweight language models. Discover quickstart guides and benchmarks, train and deploy on Google Cloud, and join the community to advance AI research.

Pricing:

- Open Source

In my previous posts, we explored how LangChain simplifies AI application development and how to deploy Gemini-powered LangChain applications on GKE. Now, let's take a look at a slightly different approach: running your own instance of Gemma, Google's open large language model, directly within your GKE cluster and integrating it with LangChain.
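Once Gemma is running in the cluster, the LangChain application talks to it over HTTP. The sketch below shows that call using only the standard library. It assumes Gemma is served by Hugging Face Text Generation Inference (TGI), whose `/generate` endpoint takes an `inputs` string plus `parameters` and returns `generated_text`. The Service URL `http://gemma-service:8000/generate` is a hypothetical placeholder, and the prompt is wrapped in Gemma's instruction-tuned turn markers.

```python
import json
import urllib.request

# Hypothetical in-cluster address of a Kubernetes Service fronting a TGI server.
GEMMA_URL = "http://gemma-service:8000/generate"


def gemma_prompt(user_message: str) -> str:
    """Wrap a message in Gemma's instruction-tuned chat turn markers."""
    return f"<start_of_turn>user\n{user_message}<end_of_turn>\n<start_of_turn>model\n"


def build_request(user_message: str, max_new_tokens: int = 256,
                  url: str = GEMMA_URL) -> urllib.request.Request:
    """Build a POST request in the shape TGI's /generate endpoint expects."""
    payload = {
        "inputs": gemma_prompt(user_message),
        "parameters": {"max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(user_message: str, timeout: float = 60.0) -> str:
    """Send the prompt to the model server and return the generated text."""
    with urllib.request.urlopen(build_request(user_message), timeout=timeout) as resp:
        return json.loads(resp.read())["generated_text"]
```

In a LangChain application you would normally not hand-roll this call: a wrapper such as `HuggingFaceEndpoint` from the `langchain-huggingface` package accepts an `endpoint_url` pointing at a TGI server, so the self-hosted model can slot into existing chains. Check that package's documentation for the parameters your version supports.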

#AI #Writing Tools #Productivity 9 social mentions

Discuss: Leverage open models like Gemma 2 on GKE with LangChain
