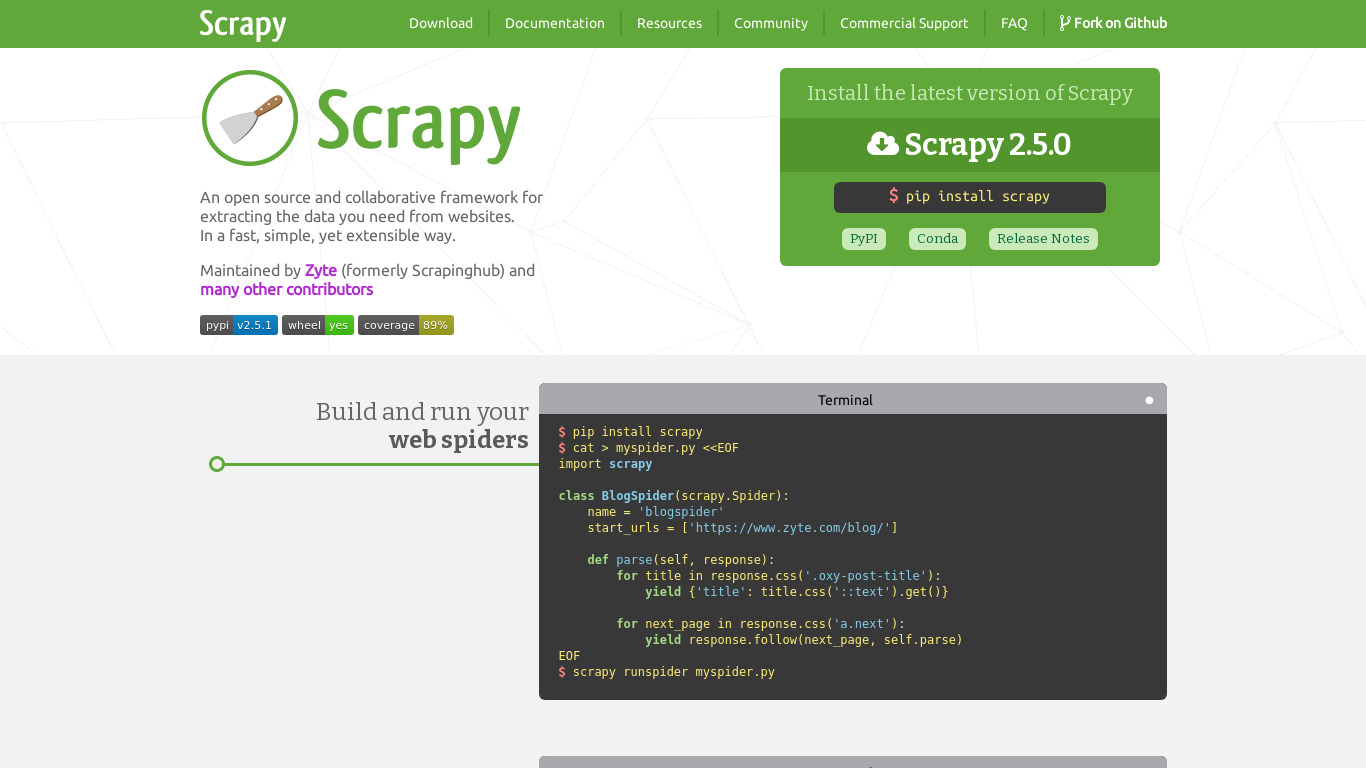

Scrapy

Scrapy | A Fast and Powerful Scraping and Web Crawling Framework

As Scrapy is an open source project, you can find more open source alternatives and stats on LibHunt.

Pricing:

- Open Source