XGBoost

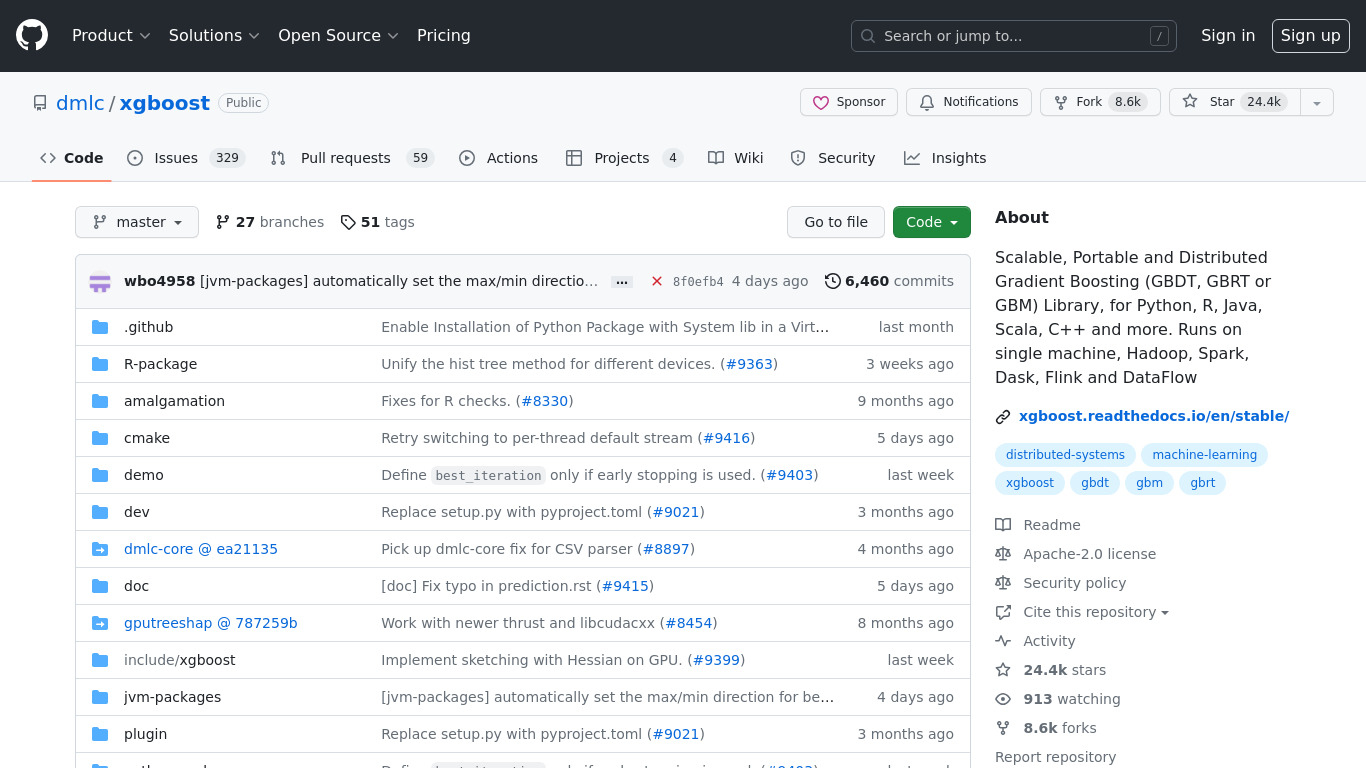

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable.

As XGBoost is an open source project, you can find more open source alternatives and stats on LibHunt.

Pricing:

- Open Source