
Apache Spark is an engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

Pricing:

- Open Source

There are different ways to implement parallel dataflows, such as using parallel data processing frameworks like Apache Hadoop, Apache Spark, and Apache Flink, or using cloud-based services like Amazon EMR and Google Cloud Dataflow. It is also possible to use parallel dataflow frameworks to handle big data and distributed computing, like Apache Nifi and Apache Kafka.
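As a rough illustration of the parallel dataflow shape these frameworks share (partition the input, process partitions concurrently, merge the results), here is a minimal sketch using only the Python standard library. It is not the API of Spark, Hadoop, or Flink; the function names `partition`, `count_words`, and `parallel_word_count` are hypothetical and chosen for this example only.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(records, n_parts):
    """Split records into n_parts interleaved slices (the scatter step)."""
    return [records[i::n_parts] for i in range(n_parts)]


def count_words(lines):
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(records, n_parts=4):
    """Run the map step on each partition concurrently, then merge."""
    parts = partition(records, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        partial_counts = list(pool.map(count_words, parts))
    # Reduce step: merge the per-partition counters into one result.
    return reduce(lambda a, b: a + b, partial_counts, Counter())


if __name__ == "__main__":
    lines = ["spark flink hadoop", "spark spark kafka", "flink nifi"]
    print(parallel_word_count(lines, n_parts=2))
```

A thread pool keeps the sketch self-contained; in CPython it will not speed up CPU-bound work, whereas the frameworks named above distribute partitions across processes or machines and add scheduling and fault tolerance on top of the same pattern.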

#Databases #Big Data #Big Data Analytics 56 social mentions


Apache Hadoop is open-source software for reliable, scalable, distributed computing.

Pricing:

- Open Source


#Databases #NoSQL Databases #Big Data 15 social mentions


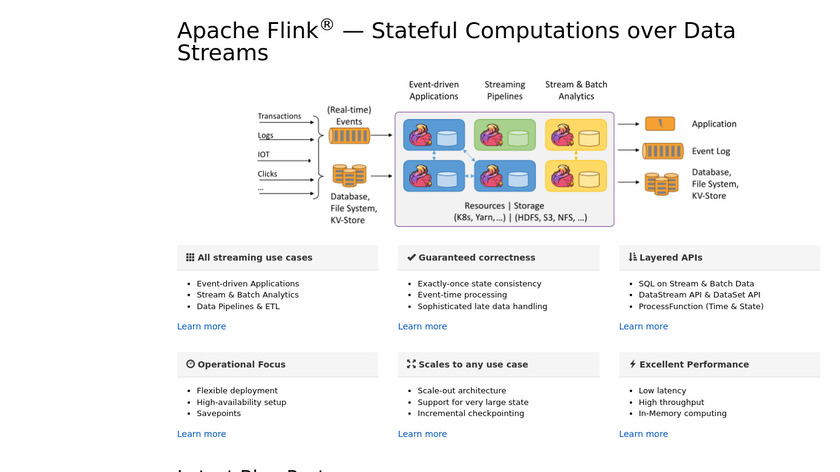

Flink is a streaming dataflow engine that provides data distribution, communication, and fault tolerance for distributed computations.

Pricing:

- Open Source


#Stream Processing #Big Data #Developer Tools 28 social mentions


Amazon Elastic MapReduce is a web service that makes it easy to quickly process vast amounts of data.


#Big Data #Big Data Tools #Big Data Infrastructure 10 social mentions

Discuss: 5 Best Practices For Data Integration To Boost ROI And Efficiency
