-

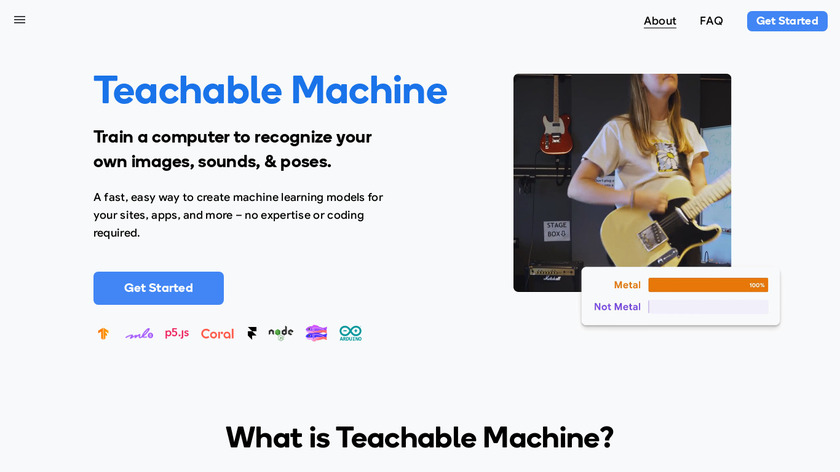

Easily create machine learning models for your apps, no coding required.

Pricing:

- Open Source

#Data Dashboard #Data Science And Machine Learning #AI 55 social mentions

-

You no longer need to collect and label images or train an ML model to add computer vision to your project.

Pricing:

- Open Source

`npx @roboflow/inference-server`

Then you can POST an image to any of the models at localhost:9001, e.g.:

`base64 yourImage.jpg | curl -d @- "http://localhost:9001/some-model/1?api_key=xxxx"`

You get back JSON predictions. There are also client libraries[2] and sample code[3] for pretty much any language you might want to use it in. You can also run any of the models directly in a browser with WebGL[4], in a native mobile app[5], or on an edge device[6].

- [1] https://universe.roboflow.com
- [2] https://github.com/roboflow-ai/roboflow-python
- [3] https://github.com/roboflow-ai/roboflow-api-snippets
- [4] https://docs.roboflow.com/inference/web-browser
- [5] https://docs.roboflow.com/inference/mobile-ios-on-device
- [6] e.g. https://docs.roboflow.com/inference/luxonis-oak
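If you would rather call the local server from Python than from the shell, here is a minimal sketch that mirrors the `curl` one-liner. It base64-encodes the image and POSTs that text as the request body, the same way `curl -d @-` does. It uses only the standard library, not Roboflow's own client library. The helper names `build_inference_request` and `predict` are hypothetical. `some-model`, version `1` and `api_key=xxxx` are the same placeholders as in the command. The sketch does not assume anything about the shape of the returned JSON.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_inference_request(
    image_bytes: bytes,
    model: str,
    version: int,
    api_key: str,
    host: str = "http://localhost:9001",
) -> urllib.request.Request:
    """Hypothetical helper: build the same POST that `base64 img | curl -d @- URL` sends."""
    query = urllib.parse.urlencode({"api_key": api_key})
    url = f"{host}/{model}/{version}?{query}"
    # b64encode emits one unbroken line; `curl -d @-` likewise strips the
    # newlines that the `base64` tool inserts, so the request bodies match.
    body = base64.b64encode(image_bytes)
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        # `curl -d` sends this content type by default.
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def predict(image_path: str, model: str, version: int, api_key: str) -> object:
    """Hypothetical helper: send an image file to the local server and parse the JSON reply."""
    with open(image_path, "rb") as f:
        request = build_inference_request(f.read(), model, version, api_key)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the inference server running, `predict("yourImage.jpg", "some-model", 1, "xxxx")` should return the same predictions as the `curl` command. Building the request is kept separate from sending it, so the request can be inspected without a server.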

#APIs #Software Engineering #Developer Tools 18 social mentions

-

Vector database built for scalable similarity search. Open-source, highly scalable, and blazing fast.

Pricing:

- Open Source

- Free

Usually this is done in three steps:

1. Use a neural network to draw a bounding box around the object.
2. Generate vector embeddings of the object.
3. Run a similarity search on those embeddings.

The first step is accomplished by training a detection model to generate the bounding box around your object. This can usually be done by fine-tuning an already trained detection model. For this step, the data you need is all the images of the object you have, each with a bounding box drawn around it. The version of the object doesn't matter here.

The second step uses a general-purpose image classification model that has been pretrained on general data (VGG, etc.), together with a vector search engine/vector database. You would start by using the image classification model to generate vector embeddings (https://frankzliu.com/blog/understanding-neural-network-embeddings) of all the different versions of the object. The more ground-truth images you have, the better, but you don't need as many as you would to train a classifier. Once you have your versions of the object as embeddings, you store them in a vector database (for example Milvus: https://github.com/milvus-io/milvus).

Now, whenever you want to detect the object in an image, you run the image through the detection model to locate the object. You then run the cropped-out image of the object through the embedding model. With this embedding you can perform a search in the vector database, and the closest results will most likely be the matching version of the object.

Hopefully this gives a general rundown of how it would look. Here is an example using Milvus and Towhee: https://github.com/towhee-io/examples/tree/3a2207d67b10a246fd6a1654adf173d9902c3cf8/image/reverse_image_search. Disclaimer: I am a part of those two open source projects.
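The detect, crop, embed and search flow described above can be sketched in plain Python, under heavy simplifying assumptions.

- **Detection is not shown.** The bounding box is taken as already produced by a detector.
- **`toy_embed` is a hypothetical stand-in** for a pretrained feature extractor such as VGG. It uses an intensity histogram so the sketch runs without any ML library.
- **`InMemoryIndex` is a hypothetical brute-force cosine-similarity index** standing in for a vector database like Milvus.

None of these names are Milvus or Towhee APIs. The Towhee example linked above shows the real thing.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Assumptions for this sketch: grayscale images as rows of intensities in [0, 1],
# and boxes as (x_min, y_min, x_max, y_max) with exclusive max coordinates.
Image = List[List[float]]
Box = Tuple[int, int, int, int]


def crop(image: Image, box: Box) -> Image:
    """Slice the detected object out of the image using the detector's box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def toy_embed(patch: Image, bins: int = 4) -> List[float]:
    """HYPOTHETICAL stand-in for a pretrained CNN embedding: a normalized
    intensity histogram of the patch."""
    hist = [0.0] * bins
    for row in patch:
        for px in row:
            hist[min(int(px * bins), bins - 1)] += 1
    total = sum(hist) or 1.0
    return [h / total for h in hist]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class InMemoryIndex:
    """HYPOTHETICAL brute-force index standing in for a vector database."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, label: str, vector: List[float]) -> None:
        self._items.append((label, vector))

    def search(self, query: Sequence[float], k: int = 1) -> List[Tuple[str, float]]:
        # Score every stored vector and return the k most similar ones.
        scored = [(label, cosine(query, vec)) for label, vec in self._items]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


def identify(
    image: Image,
    box: Box,
    index: InMemoryIndex,
    embed: Callable[[Image], List[float]] = toy_embed,
    k: int = 1,
) -> List[Tuple[str, float]]:
    """Embed the cropped object, then look up the closest stored versions."""
    return index.search(embed(crop(image, box)), k)
```

In use, you would first `add` one embedding per known version of the object. For each new image, you would then call `identify` with the detector's box. Swapping `toy_embed` for a real CNN and `InMemoryIndex` for a vector database does not change the shape of the flow.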

#Vector Databases #Retrieval Augmented Generation #Search Engine 34 social mentions

Discuss: Ask HN: Any good self-hosted image recognition software?
