Ollama: the easiest way to run large language models locally.

Pricing:

- Open Source

Go to https://ollama.com/ and download Ollama, then install it on your machine.
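Once Ollama is installed, it can help to confirm that the local server is reachable. The following is a minimal sketch, assuming Ollama is listening on its default address `http://localhost:11434` and that `GET /api/tags` returns the locally installed models; the helper names `parse_model_names` and `list_local_models` are hypothetical and chosen only for illustration.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional

# Assumption: Ollama is running on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> List[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL,
                      timeout: float = 3.0) -> Optional[List[str]]:
    """Return the installed model names, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON response.
        return None
```

Calling `list_local_models()` returns `None` when nothing answers on that address, and an empty list when Ollama is running but no model has been pulled yet.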


-

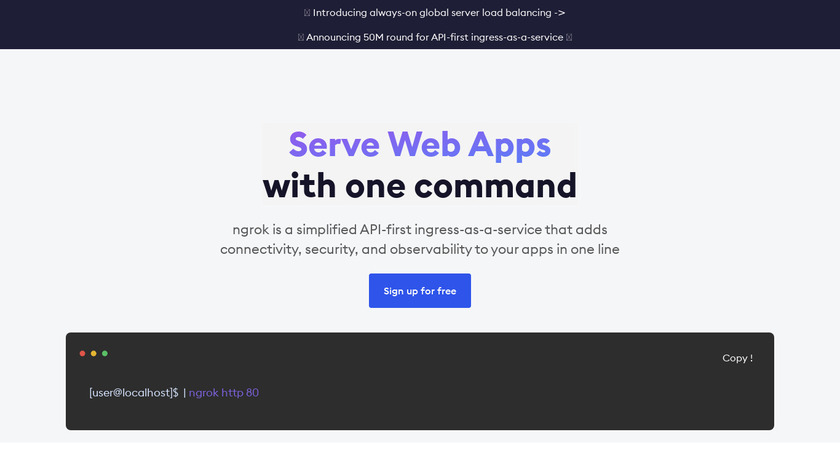

ngrok enables secure introspectable tunnels to localhost webhook development tool and debugging tool.Pricing:

- Open Source

Go to https://ngrok.com/ and download ngrok, install it on your machine, then set it up.
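After setup, a tunnel to the local Ollama port is typically started with `ngrok http 11434`. The sketch below shows one way to look up the resulting public address, assuming the ngrok agent exposes its local inspection API at `http://127.0.0.1:4040/api/tunnels` (its default) and that Ollama serves its OpenAI-compatible routes under `/v1`. The function names are hypothetical, not part of either tool.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# Assumption: the ngrok agent is running with its default local API port.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def pick_https_url(payload: dict) -> Optional[str]:
    """Return the first HTTPS public URL found in the tunnels listing."""
    for tunnel in payload.get("tunnels", []):
        url = tunnel.get("public_url", "")
        if url.startswith("https://"):
            return url
    return None


def openai_base_url(public_url: str) -> str:
    """Append the /v1 prefix used by Ollama's OpenAI-compatible routes."""
    return public_url.rstrip("/") + "/v1"


def current_base_url(api_url: str = NGROK_API,
                     timeout: float = 3.0) -> Optional[str]:
    """Return the tunnelled base URL, or None if no tunnel is available."""
    try:
        with urllib.request.urlopen(api_url, timeout=timeout) as resp:
            public_url = pick_https_url(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        return None
    return openai_base_url(public_url) if public_url else None
```

The value returned by `current_base_url()` is what an OpenAI-compatible client would be pointed at in place of the default API base URL.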
