- Apache Spark

Apache Spark is an engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.

Pricing:

- Open Source

Apache Spark is an open-source, flexible, in-memory framework that serves as an alternative to MapReduce for batch processing, real-time analytics and other data processing workloads. It provides native bindings for the Java, Scala, Python, and R programming languages, and supports SQL, streaming data, machine learning and graph processing. Since its beginnings in the AMPLab at UC Berkeley in 2009, Apache Spark has become one of the world's leading distributed big data processing frameworks. It is also known for its speed and developer-friendly APIs.
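
The following minimal pure-Python sketch illustrates the lazy, in-memory model that distinguishes Spark from classic MapReduce. It is not PySpark. `LazyDataset`, `map`, `filter`, `cache` and `collect` are hypothetical names loosely modeled on Spark's RDD operations. Transformations only record work. An action such as `collect` runs the chain, and a cached result is then reused from memory instead of rescanning the input.

```python
from typing import Callable, Iterable, Iterator, List, Optional


class LazyDataset:
    """Toy stand-in for a Spark RDD (hypothetical, not the PySpark API)."""

    def __init__(self, source: Callable[[], Iterable]):
        self._source = source                  # produces the records on demand
        self._cache_requested = False
        self._cached: Optional[List] = None    # in-memory copy once computed

    def map(self, fn) -> "LazyDataset":
        # Transformation: only records the work, nothing runs yet.
        return LazyDataset(lambda: (fn(x) for x in self._iter()))

    def filter(self, pred) -> "LazyDataset":
        return LazyDataset(lambda: (x for x in self._iter() if pred(x)))

    def cache(self) -> "LazyDataset":
        # Keep the result in memory the first time it is computed.
        self._cache_requested = True
        return self

    def _iter(self) -> Iterator:
        if self._cached is not None:
            return iter(self._cached)          # reuse the in-memory result
        data = list(self._source())
        if self._cache_requested:
            self._cached = data
        return iter(data)

    def collect(self) -> List:
        # Action: evaluates the whole chain of transformations.
        return list(self._iter())


reads = [0]


def read_input():
    reads[0] += 1                              # count full scans of the input
    return range(1, 11)


squares = LazyDataset(read_input).map(lambda x: x * x).filter(lambda x: x % 2 == 0).cache()
print(reads[0])            # 0: no work has been done yet
print(squares.collect())   # [4, 16, 36, 64, 100]
print(squares.collect())   # same result, served from the cached copy
print(reads[0])            # 1: the input was scanned only once
```

In real Spark, the same chain is written with `map`, `filter` and `cache` on an RDD or DataFrame. The cluster, not a single process, does the work.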

#Databases #Big Data #Big Data Analytics 72 social mentions

- Keboola

Keboola is a next-gen data platform. It simplifies and accelerates data engineering, so companies get better results from their data operations.

Pricing:

- Open Source

- Freemium

- Free Trial

Keboola is a Software-as-a-Service (SaaS) data operations platform that covers the entire operational cycle of a data pipeline. From ETL (extract-transform-load) jobs to orchestration and monitoring, Keboola provides a holistic platform for data management. Its architecture is modular and plug-and-play, which allows for greater customization. Beyond the expected features, Keboola takes an advanced approach to the data pipeline, offering one-click deployment of digital sandboxes, out-of-the-box machine learning features and more. The platform is built to be resilient, to scale with users' data needs, and to keep data safe with advanced security techniques.
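
A generic extract-transform-load run can be sketched as follows. This is not Keboola's API or configuration format. The functions `extract`, `transform`, `load` and `run_pipeline`, the sample CSV, and the in-memory SQLite destination are all assumptions made for illustration. The orchestration step runs the stages in order and records row counts, a simple stand-in for the monitoring a managed platform provides.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; the third row is missing its amount.
RAW_CSV = """order_id,amount,currency
1,19.99,USD
2,5.00,usd
3,,USD
"""


def extract(text):
    # Extract: parse raw CSV into dictionaries.
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    # Transform: drop incomplete rows and normalize types and currency codes.
    clean = []
    for row in rows:
        if not row["amount"]:
            continue
        clean.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return clean


def load(rows, conn):
    # Load: write the cleaned rows into the destination table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def run_pipeline(text, conn, log):
    # Orchestration: run the steps in order and record each one for monitoring.
    rows = extract(text)
    log.append(("extract", len(rows)))
    clean = transform(rows)
    log.append(("transform", len(clean)))
    loaded = load(clean, conn)
    log.append(("load", loaded))
    return loaded


conn = sqlite3.connect(":memory:")
log = []
run_pipeline(RAW_CSV, conn, log)
print(log)  # [('extract', 3), ('transform', 2), ('load', 2)]
```

The drop from three extracted rows to two loaded rows in the log is the kind of signal a monitoring layer would surface.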

#Data Integration #ETL #Data Extraction 2 social mentions

- Hadoop

Open-source software for reliable, scalable, distributed computing.

Pricing:

- Open Source

Hadoop is an open-source framework for storing and processing big data in a distributed environment across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than relying on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, thus delivering a highly available service on top of a cluster of computers, each of which may be prone to failure. It can handle large data volumes and perform complex transformations and computations at scale. Over the years, other projects have been built on top of Hadoop to extend it beyond batch jobs toward real-time processing.
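
Hadoop's original programming model is MapReduce. The sketch below simulates a word count in a single Python process and does not use the Hadoop Java API. Mappers emit `(word, 1)` pairs, a shuffle groups them by key, and reducers sum each group. `run_with_retry` is a hypothetical helper that re-runs a failed task, loosely mirroring the software-level failure handling described above. Real Hadoop reschedules failed tasks across the cluster rather than retrying in place.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(split: str) -> List[Tuple[str, int]]:
    # Map: emit (word, 1) for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Shuffle: group intermediate values by key between the two phases.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Reduce: combine all values for one key.
    return key, sum(values)


def run_with_retry(task, arg, attempts: int = 3):
    # Hypothetical helper: re-run a task that fails, up to `attempts` times.
    last_error = None
    for _ in range(attempts):
        try:
            return task(arg)
        except RuntimeError as err:
            last_error = err
    raise last_error


def word_count(splits: List[str], mapper=map_phase) -> Dict[str, int]:
    intermediate = []
    for split in splits:
        intermediate.extend(run_with_retry(mapper, split))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


failures = {"count": 0}


def flaky_mapper(split: str) -> List[Tuple[str, int]]:
    # Simulate a worker that crashes on its very first attempt.
    if failures["count"] == 0:
        failures["count"] += 1
        raise RuntimeError("simulated node failure")
    return map_phase(split)


result = word_count(["big data big", "data pipeline"], mapper=flaky_mapper)
print(result)  # {'big': 2, 'data': 2, 'pipeline': 1}
```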

#Databases #Relational Databases #NoSQL Databases 26 social mentions

- Apache Kafka

Apache Kafka is an open-source message broker project developed by the Apache Software Foundation, written in Scala and Java.

Pricing:

- Open Source

Apache Kafka is also a leading technology for streaming real-time data pipelines. It is an open-source distributed streaming platform used to build real-time data pipelines and stream processing applications. Enterprises use Apache Kafka to manage peak data ingestion loads and as a big data message bus. Its ability to absorb peak ingestion loads is a significant advantage over common storage engines. A common use of Kafka is in the back end, where it integrates microservices. It can also feed real-time stream processing frameworks such as Flink or Spark, and send data to other platforms for streaming analytics.
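
Kafka's ability to buffer ingestion peaks rests on a partitioned, append-only log that consumers read at their own pace by tracking offsets. The pure-Python model below illustrates that idea. `ToyTopic` and `ToyConsumerGroup` are hypothetical classes, not the Kafka client API, and `zlib.crc32` stands in for the hash used by Kafka's default partitioner.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


class ToyTopic:
    """Append-only, partitioned log: a simplified model of a Kafka topic."""

    def __init__(self, num_partitions: int):
        self.partitions: List[List[Tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        # Same key -> same partition, so per-key ordering is preserved.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class ToyConsumerGroup:
    """Tracks one committed offset per partition, as a consumer group does."""

    def __init__(self, topic: ToyTopic):
        self.topic = topic
        self.offsets: Dict[int, int] = defaultdict(int)

    def poll(self, max_records: int = 10) -> List[Tuple[str, str]]:
        batch = []
        for p, log in enumerate(self.topic.partitions):
            start = self.offsets[p]
            records = log[start:start + max_records]
            batch.extend(records)
            self.offsets[p] = start + len(records)  # "commit" after reading
        return batch


topic = ToyTopic(num_partitions=3)
for i in range(5):
    topic.produce(key=f"sensor-{i % 2}", value=f"reading-{i}")

group = ToyConsumerGroup(topic)
first = group.poll()
print(len(first))    # 5
print(group.poll())  # []: nothing new since the last commit
```

Because each group keeps its own offsets, a second `ToyConsumerGroup` on the same topic would read all five records again. This is how several downstream systems can consume the same stream independently.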

#Stream Processing #Data Integration #ETL 146 social mentions

- Apache Storm

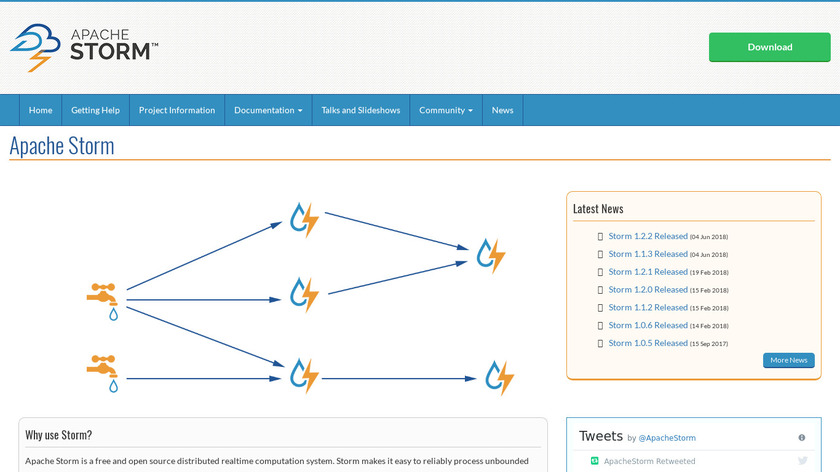

Apache Storm is a free and open source distributed realtime computation system.

Pricing:

- Open Source

Apache Storm is an open-source distributed real-time computation system for processing data streams. Apache Storm does for unbounded streams of data what Hadoop does for batch processing, and it processes them reliably. Originally created at BackType and open-sourced by Twitter, Apache Storm is aimed specifically at transforming data streams. Storm has many use cases, such as real-time analytics, online machine learning, continuous computation, distributed RPC, ETL and more. It integrates with the queueing and database technologies you already use. An Apache Storm topology consumes streams of data and processes those streams in arbitrarily complex ways, repartitioning the streams between each stage of the computation as needed.
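
A topology of this kind can be simulated in plain Python. This is a sketch, not Storm's Java API. The spout, the two bolts and the `fields_grouping` function are hypothetical stand-ins named after Storm concepts. A spout emits sentences, one bolt splits them into words, and a fields-style grouping repartitions the words so that each word is always counted by the same parallel task.

```python
import zlib
from collections import Counter
from typing import Iterator, List


def sentence_spout() -> Iterator[str]:
    # Spout: source of a stream of tuples (unbounded in a real deployment).
    yield from ["the cow jumped", "over the moon", "the end"]


def split_bolt(sentence: str) -> List[str]:
    # Bolt 1: transform each sentence into word tuples.
    return sentence.split()


class CountBoltTask:
    # Bolt 2: one parallel task that keeps running counts for its words.
    def __init__(self):
        self.counts = Counter()

    def execute(self, word: str) -> None:
        self.counts[word] += 1


def fields_grouping(word: str, num_tasks: int) -> int:
    # Repartition by field value so a given word always reaches the same task.
    return zlib.crc32(word.encode()) % num_tasks


def run_topology(num_count_tasks: int = 2) -> List[CountBoltTask]:
    tasks = [CountBoltTask() for _ in range(num_count_tasks)]
    for sentence in sentence_spout():
        for word in split_bolt(sentence):
            tasks[fields_grouping(word, num_count_tasks)].execute(word)
    return tasks


tasks = run_topology()
totals = sum((t.counts for t in tasks), Counter())
print(totals["the"])  # 3
```

Routing by word rather than at random is what lets each counting task keep a correct running total without coordinating with the others.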

#Big Data #Data Warehousing #Data Dashboard 11 social mentions

Discuss: 5 Best-Performing Tools that Build Real-Time Data Pipeline
