Best message queue for cloud-native apps

If you trace the history of message queues, you will notice an interesting pattern: most of today's popular message queues were born around 2010. Apache Kafka was created at LinkedIn in 2010, Derek Collison developed NATS in 2010, and Apache Pulsar was born at Yahoo in 2012. What explains this?

Are Free, Open-Source Message Queues Right For You?

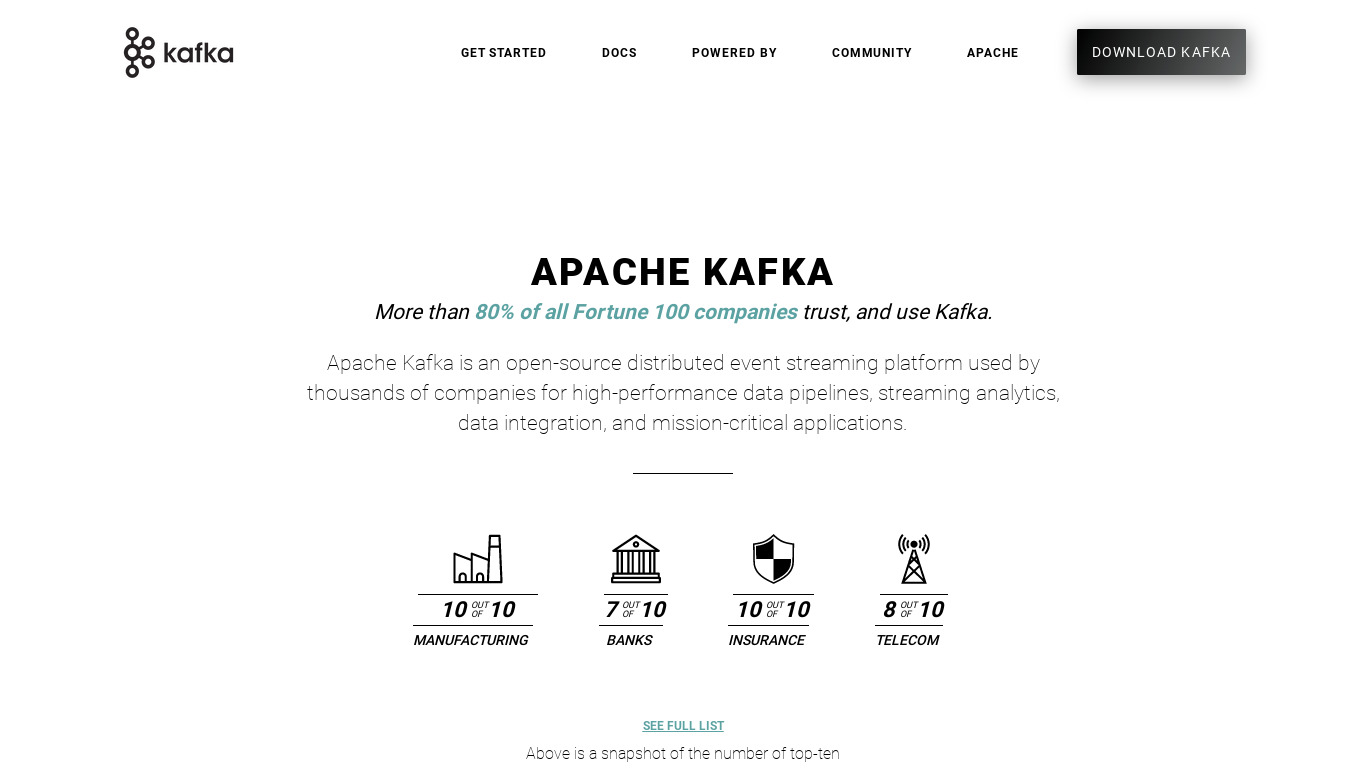

Apache Kafka is a highly scalable and robust messaging system, originally developed at LinkedIn and later donated to the Apache Software Foundation. It is well suited to real-time data streaming and processing, and offers high throughput for publishing and subscribing to records (messages). Kafka is typically used for real-time analytics and monitoring, IoT applications, log aggregation, and event sourcing.
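To make the publish/subscribe idea concrete, here is a toy, in-memory model of a Kafka-style topic: an append-only log that each consumer group reads at its own offset, so several independent subscribers (for example, analytics and monitoring) can consume the same records. This is a sketch under simplifying assumptions, not Kafka's client API; `ToyTopic`, `publish`, and `poll` are hypothetical names, and there is no persistence, partitioning, or networking.

```python
from collections import defaultdict


class ToyTopic:
    """Toy model of a Kafka-style topic (hypothetical, not Kafka's API):
    an append-only log that consumer groups read by offset."""

    def __init__(self) -> None:
        self._log: list[str] = []  # records in publish order
        self._next_offset: dict[str, int] = defaultdict(int)  # per consumer group

    def publish(self, record: str) -> int:
        """Append a record and return the offset it was stored at."""
        self._log.append(record)
        return len(self._log) - 1

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        """Return unread records for a group and advance that group's offset."""
        start = self._next_offset[group]
        batch = self._log[start:start + max_records]
        self._next_offset[group] = start + len(batch)
        return batch


topic = ToyTopic()
for event in ["login", "click", "logout"]:
    topic.publish(event)

print(topic.poll("analytics"))                   # ['login', 'click', 'logout']
print(topic.poll("monitoring", max_records=2))   # ['login', 'click']
print(topic.poll("analytics"))                   # []
```

Because reading does not delete records, each group sees the full stream independently, which is the main difference from a classic queue where a consumed message is gone.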

10 Best Open Source ETL Tools for Data Integration

It is difficult to anticipate the exact demand for open-source tools in 2023 because it depends on various factors and emerging trends. However, open-source solutions such as Kubernetes for container orchestration, TensorFlow for machine learning, Apache Kafka for real-time data streaming, and Prometheus for monitoring and observability are expected to grow in prominence in 2023. Specific tool needs may change...

11 Best FREE Open-Source ETL Tools in 2024

Apache Kafka is an open-source data streaming tool written in Scala and Java. It publishes and subscribes to streams of records in a fault-tolerant manner and provides a unified, high-throughput, low-latency platform for managing data.
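Kafka's fault tolerance comes from replicating each partition across brokers: a record counts as committed once every in-sync replica holds it, and consumers only read up to that point (the high-water mark). The sketch below models that rule in a few lines, assuming every live replica is in sync and replication happens in explicit steps; `ToyPartition`, `replicate`, and `fail` are hypothetical names, not Kafka's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    log: list[str] = field(default_factory=list)
    alive: bool = True


class ToyPartition:
    """Simplified model of one replicated partition (all names hypothetical).

    The leader appends records and followers copy them. A record is committed
    once every live (in-sync) replica holds it, so the high-water mark is the
    shortest log among live replicas."""

    def __init__(self, replica_names: list[str]) -> None:
        self.replicas = [Replica(name) for name in replica_names]
        self.leader = self.replicas[0]

    def in_sync(self) -> list[Replica]:
        # Simplification: every live replica is treated as in sync.
        return [r for r in self.replicas if r.alive]

    def append(self, record: str) -> None:
        self.leader.log.append(record)

    def replicate(self) -> None:
        # Live followers copy the leader's log.
        for r in self.in_sync():
            if r is not self.leader:
                r.log = list(self.leader.log)

    def high_water_mark(self) -> int:
        # Consumers may read only offsets below this value.
        return min(len(r.log) for r in self.in_sync())

    def fail(self, name: str) -> None:
        for r in self.replicas:
            if r.name == name:
                r.alive = False
        if not self.leader.alive:
            survivors = self.in_sync()
            if not survivors:
                raise RuntimeError("no in-sync replica left to become leader")
            # Every survivor holds all committed records; uncommitted ones are lost.
            self.leader = survivors[0]


p = ToyPartition(["broker-1", "broker-2", "broker-3"])
p.append("order-1")
p.replicate()
p.append("order-2")                  # written to the leader only
print(p.high_water_mark())           # 1: only order-1 is committed
p.fail("broker-1")                   # leader crashes before replicating order-2
print(p.leader.name, p.leader.log)   # broker-2 ['order-1']
```

The committed record survives the leader failure while the unreplicated one does not, which is why producers that cannot tolerate loss typically wait for acknowledgment from all in-sync replicas.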

NATS vs RabbitMQ vs NSQ vs Kafka | Gcore

One of Apache Kafka's biggest drawbacks is the very architecture that makes it so efficient. The combination of brokers and ZooKeeper nodes, along with numerous configuration options, can make it difficult for new teams to set up and manage a cluster without running into performance issues or data loss. However, since version 3.3.1, Kafka can run without ZooKeeper in KRaft mode, which manages cluster metadata with a built-in Raft-based controller quorum, simplifying the architecture and improving performance.
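Because KRaft controllers agree on metadata through Raft, the controller quorum stays available only while a majority of voters is up. The arithmetic below is a small sketch of that majority rule (the function names are hypothetical); it shows why odd-sized controller quorums are the usual choice, since adding a fourth controller to three raises the required majority without tolerating any extra failure.

```python
def quorum_size(voters: int) -> int:
    """Votes needed for a Raft majority among `voters` controllers."""
    if voters < 1:
        raise ValueError("need at least one voter")
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """Controllers that can fail while a majority is still available."""
    return voters - quorum_size(voters)


for n in (1, 3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2
```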

6 Best Kafka Alternatives: 2022’s Must-know List

Built on a robust suite of components from community projects such as Apache Kafka and Apache ActiveMQ, Red Hat AMQ offers a secure, lightweight solution for message delivery and is one of the best Kafka alternatives. Compared to most streaming tools, Red Hat AMQ executes faster and provides a flexible messaging tool for instant communication. Consequently, Red Hat AMQ effectively meets organizational needs and integrates...

Top 15 Kafka Alternatives Popular In 2021

Red Hat AMQ is a powerful suite of components built on community projects such as Apache Kafka and Apache ActiveMQ, offering a secure and lightweight solution. It executes quickly and is a flexible messaging tool that enables instant delivery of information. It responds quickly to organizational needs and integrates applications seamlessly across the enterprise.

Top 10 Popular Open-Source ETL Tools for 2021

Apache Kafka is an open-source data streaming tool written in Scala and Java. It publishes and subscribes to streams of records in a fault-tolerant manner and provides a unified, high-throughput, low-latency platform for managing data.

Top ETL Tools For 2021...And The Case For Saying "No" To ETL

Apache Kafka is an open-source platform written in Scala and Java. It provides a unified, high-throughput, low-latency platform for managing real-time data. Kafka publishes and subscribes to streams of records in a fault-tolerant way, as soon as they occur.
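Streams of records in Kafka are split into partitions, and records that share a key are routed to the same partition, which keeps their relative order intact. The sketch below illustrates key-based partitioning under an explicit assumption: it uses `zlib.crc32` as a stand-in hash, whereas Kafka's default partitioner hashes keys with murmur2, so the actual partition numbers will differ from a real cluster. `choose_partition` and `partition_records` are hypothetical names.

```python
import zlib
from collections import defaultdict


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition (crc32 stands in for Kafka's murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_records(
    records: list[tuple[str, str]], num_partitions: int
) -> dict[int, list[tuple[str, str]]]:
    """Group (key, value) records by partition, preserving arrival order."""
    partitions: dict[int, list[tuple[str, str]]] = defaultdict(list)
    for key, value in records:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return dict(partitions)


events = [("user-1", "login"), ("user-2", "login"), ("user-1", "click"), ("user-1", "logout")]
for pid, batch in sorted(partition_records(events, num_partitions=3).items()):
    print(pid, batch)
```

Ordering is guaranteed only within a partition, so choosing a key such as a user ID is how a producer keeps one user's events in sequence while still spreading load across partitions.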

5 Best-Performing Tools that Build Real-Time Data Pipeline

Apache Kafka is also a leading technology for streaming real-time data pipelines. It is an open-source distributed streaming platform that is useful for building real-time data pipelines and stream-processing applications. Enterprises use Apache Kafka to handle peak data ingestion loads and as a big data message bus. The capabilities of Apache Kafka to manage peak data ingestion loads are a unique...
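A message bus absorbs ingestion peaks by letting producers write at burst rate while consumers keep processing at their own steady pace; the surplus waits in the log as backlog. The simulation below is a minimal sketch of that effect with hypothetical traffic numbers and a hypothetical `simulate_backlog` function: the backlog grows during the burst and drains once arrivals fall below consumer capacity.

```python
def simulate_backlog(arrivals: list[int], capacity: int) -> list[int]:
    """Return the backlog after each tick when consumers process at most
    `capacity` messages per tick and the log buffers the remainder."""
    backlog = 0
    history = []
    for incoming in arrivals:
        backlog += incoming
        backlog -= min(backlog, capacity)  # consumers drain what they can
        history.append(backlog)
    return history


# Hypothetical traffic: a burst of 500 messages/tick against consumers handling 200/tick.
print(simulate_backlog([100, 500, 500, 100, 0, 0], capacity=200))
# [0, 300, 600, 500, 300, 100]
```

Without a durable buffer, the 300 to 600 messages of excess in this example would have to be dropped or would overload downstream systems; with one, they are simply processed a few ticks later.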